Schedule

Friday, 18th March 2016 |

|||

| 08:45 - 09:00 | Opening | ||

| 09:00 - 10:00 | Keynote (Moustafa Youssef: A Decade Later - Challenges: Device-free Passive Localization for Wireless Environments) | ||

| 10:00 - 10:30 | Morning coffee break | ||

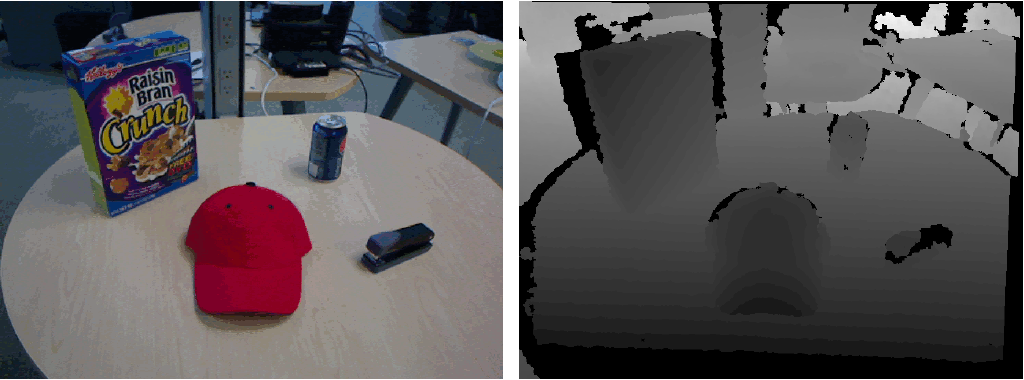

| 10:30 - 12:00 | Session (Depth-, sound-, and environmental sensors)

Henry Zhong; Salil S Kanhere; Chun Tung Chou

Pervasive computing applications have long used contact-free object detection to process images collected from smartphone, tablet and wearable cameras. Two key challenges encountered in uncontrolled environments are detection accuracy and speed, more so on computationally limited embedded systems. Recent technological advances have led to the emergence of depth sensors being integrated in a variety of embedded devices such as smartphones and wearables. Depth sensors are contact-free and avoid some of the pitfalls caused by variations in lighting and background conditions that are typically associated with images from conventional cameras. This paper presents QuickFind, a fast and lightweight algorithm for object detection using only depth data. QuickFind is particularly suited to run on embedded platforms as it uses a quick method for segmentation and makes use of low-overhead features. We demonstrate empirically the performance of QuickFind by benchmarking on two embedded systems: Raspberry Pi and Intel Edison. We also demonstrate the wide applicability of QuickFind by developing two proof-of-concept pervasive computing applications.

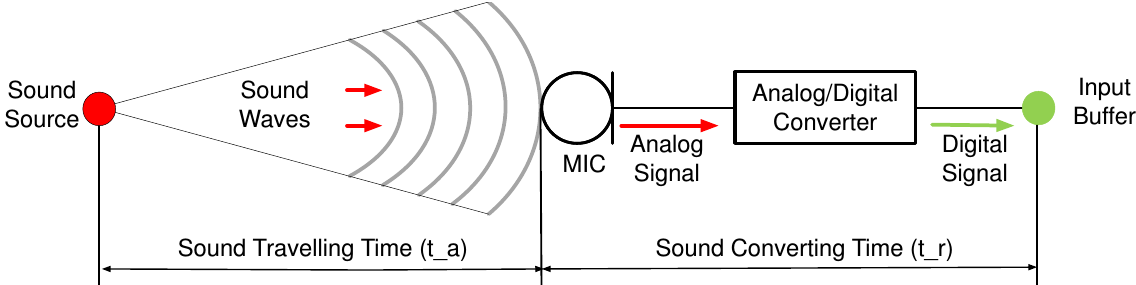

Duc Le; Jacob Kamminga; Hans Scholten; Paul Havinga

The proliferation of smartphones has enabled many crowd-assisted applications, including audio-based sensing. In such applications, detected sound sources are meaningless without location information. However, it is challenging to localize sound sources accurately in a crowd using only microphones integrated in smartphones without existing infrastructure, such as dedicated microphone sensor systems. The main reason is that a smartphone is a nondeterministic platform that produces large and unpredictable variance in data measurements. Most existing localization methods are deterministic algorithms that are ill-suited to, or cannot be applied to, sound source localization using only smartphones. In this paper, we propose a distributed localization scheme using nondeterministic algorithms. We use the multiple possible outcomes of nondeterministic algorithms to weed out the effect of outliers in data measurements and improve the accuracy of sound localization. We then propose to optimize the cost function using least absolute deviations (LAD) rather than ordinary least squares (OLS) to lessen the influence of the outliers. To evaluate our proposal, we conduct a testbed experiment with a set of 16 Android devices and 9 sound sources. The experimental results show that our nondeterministic localization algorithm achieves a root mean square error (RMSE) of 1.19 m, which is close to the Cramér-Rao bound (0.8 m). Meanwhile, the best RMSE of the compared deterministic algorithms is 2.64 m.

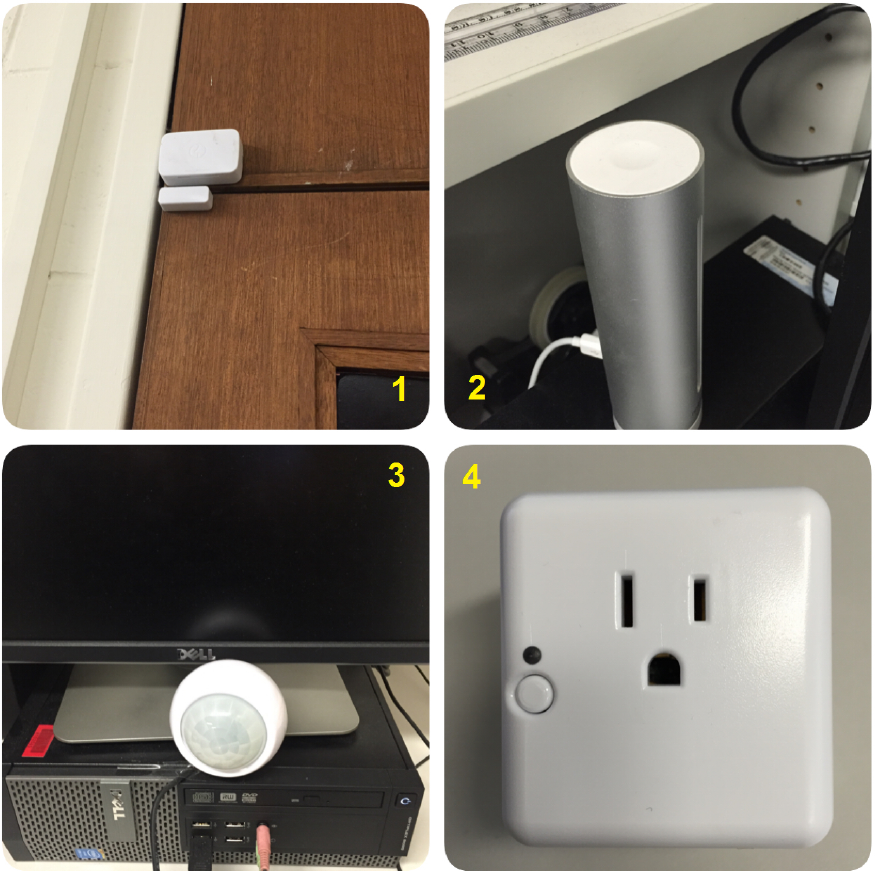

Irvan Arief Ang; Flora D Salim; Margaret Hamilton

With advances in sensors and the Internet of Things, gathering spatiotemporal information from one's surroundings has become easier. To an extent, we can start to use sensor data to infer indoor occupancy patterns. This paper aims to identify which ambient sensors are the most dominant in recognising human presence. We deployed four different types of off-the-shelf sensors from two manufacturers to ensure we could collect the following data reliably: illumination, temperature, humidity, levels of carbon dioxide, pressure and sound from within one staff office. We also collected motion, power consumption, and door opening and closing data, along with annotations from a self-developed mobile app as ground truth. We present our methods to preprocess the data and compute the number of people in the room with different classifiers, and identify sensors with strong and weak correlations. We explain our methodology for integrating large amounts of sensor data and discuss our experiments and findings in relation to the binary occupancy of a single-person office, providing a baseline for recognising human occupancy.

| ||

| 12:00 - 13:00 | Lunch break | ||

| 13:00 - 14:00 | Invited talk by Sourav Bhattacharya (slides)

Enabling Efficient Deep Learning Inference on Mobile Devices

Breakthroughs from the field of deep learning are radically changing how sensor data are interpreted to extract high-level information needed by mobile apps. It is critical that the gains in inference accuracy that deep models afford become embedded in future generations of mobile apps. However, significant memory and computational requirements have been the main bottlenecks in the wide-scale adoption of these novel computational techniques on resource-constrained wearable and mobile platforms. In this talk we present novel design principles that significantly lower the device resources (viz. memory, computation, energy) required by deep learning, overcoming severe challenges to its mobile adoption. Our approach is founded on resource control algorithms and runtime resource scaling of deep model architectures, both designed for the inference stage. Experiments show that the proposed optimizations allow even large-scale deep learning models to execute efficiently on modern mobile processors and significantly outperform existing solutions, such as cloud-based offloading. | ||

| 14:00 - 15:00 | Session (RF-based sensing)

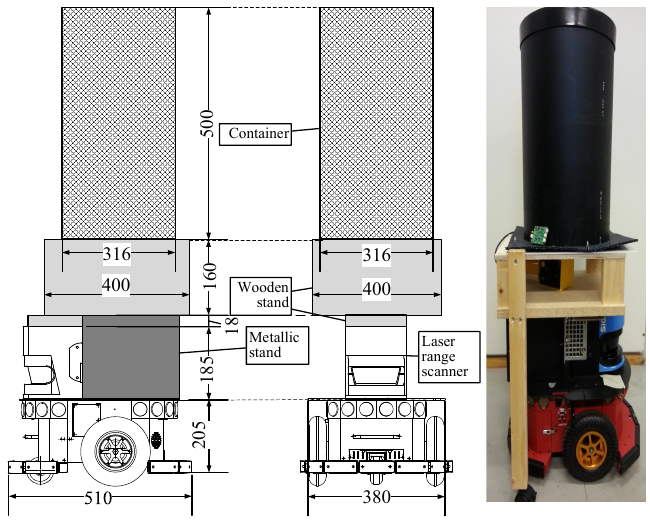

Huseyin Yigitler; Riku Jäntti

Received-signal-strength-based radio tomographic imaging has recently gained momentum as an unobtrusive localization method. Assessing the localization accuracy of these methods requires complex experimental campaigns. In particular, the dependence of performance on test subject parameters should be considered. In this work, we introduce a radio tomographic imaging experimentation system using an autonomously navigating robot. The robot is equipped with a cylindrical container filled with human-tissue-simulating liquid. We use this system to assess the localization accuracy of different imaging methods. The results suggest that the first method to appear, known as the network shadowing model, performs as well as the other methods, with the additional advantage of simplicity.

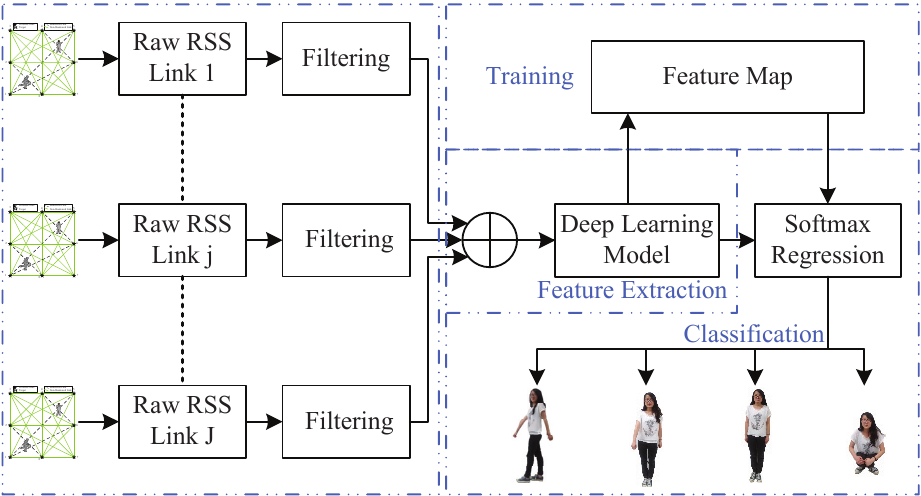

Xiao Zhang; Jie Wang; Qinghua Gao; Xiaorui Ma; Hongyu Wang

Recent advances in device-free wireless localization and activity recognition (DFLAR) have made it possible to acquire context information about a target without its participation. This novel technique has great potential for many applications, e.g., smart spaces, smart homes, and security safeguarding. One fundamental question in DFLAR is how to design discriminative features to characterize the raw wireless signal. Existing work relies on manually designed, handcrafted features, e.g., the mean and variance of the raw signal, which are not universal across different activities. Inspired by deep learning, we explore learning universal and discriminative features automatically with a deep learning model. By merging the learned features into a softmax-regression-based machine learning framework, we develop a deep-learning-based DFLAR system. Experimental evaluation on a testbed of 8 wireless nodes confirms that, compared with traditional handcrafted features, the DFLAR system with the learned features can achieve better performance.

| ||

| 15:00 - 15:30 | Afternoon coffee break | ||

| 15:30 - 16:00 | Session (RF-based sensing)

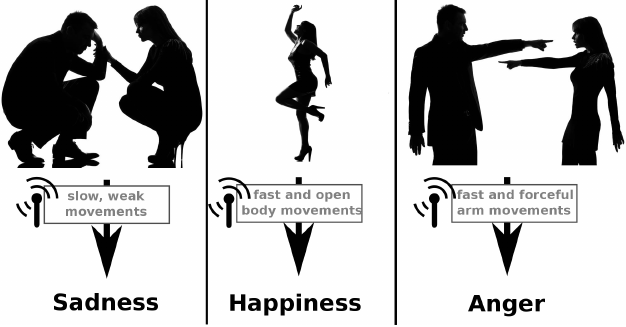

Muneeba Raja; Stephan Sigg

Human emotion recognition has attracted a lot of research in recent years. However, conventional methods for sensing human emotions are either expensive or privacy-intrusive. In this paper, we explore a connection between emotion recognition and RF-based activity recognition that can lead to a novel ubiquitous emotion sensing technology. We discuss the latest literature from both domains, highlight the potential of body movements for accurate emotion detection, and focus on how emotion recognition could be done using inexpensive, less privacy-intrusive, device-free RF sensing methods. Applications include real-time environment and crowd behaviour tracking, assisted living, health monitoring, and domestic appliance control. As a result of this survey, we propose RF-based device-free recognition for emotion detection based on body movements. However, it requires overcoming challenges, such as accuracy, to outperform classical methods.

| ||

| 16:00 | Closing | ||